Overview

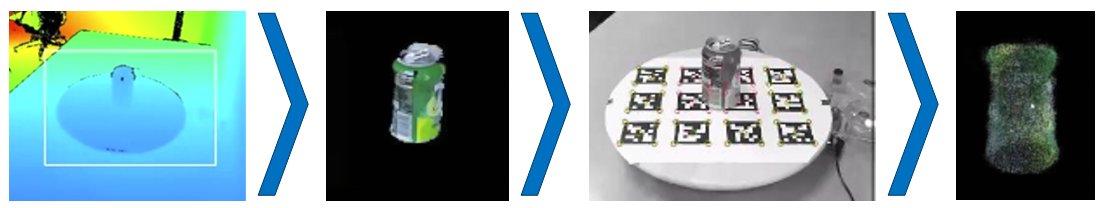

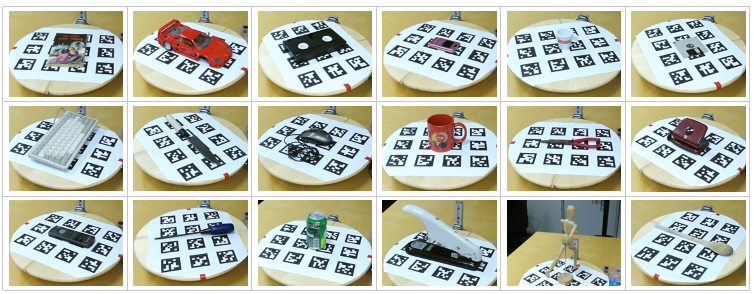

Our dataset was captured with a Microsoft Kinect One. For each captured object, it provides up to 90 pairs of RGB and depth images, along with the object's registered and segmented point cloud and a polygon mesh. We captured over 200 common household objects, ranging from cups and dishes to staplers, ashtrays and so on.
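To make the per-object contents concrete, the following is a minimal sketch of how one object's record could be represented in code. The class names, field names and path-based layout are hypothetical and do not describe the dataset's actual file structure. Only the "up to 90 frame pairs" bound, the point cloud and the polygon mesh come from the description above.

```python
from dataclasses import dataclass, field
from typing import List

# Upper bound on RGB/depth pairs per object, as stated in the dataset description.
MAX_FRAME_PAIRS = 90


@dataclass
class FramePair:
    rgb_path: str    # hypothetical location of one RGB image
    depth_path: str  # hypothetical location of the matching depth image


@dataclass
class CapturedObject:
    name: str
    frames: List[FramePair] = field(default_factory=list)
    point_cloud_path: str = ""  # registered and segmented point cloud (hypothetical field)
    mesh_path: str = ""         # polygon mesh (hypothetical field)

    def __post_init__(self) -> None:
        # Reject records that exceed the stated per-object frame-pair limit.
        if len(self.frames) > MAX_FRAME_PAIRS:
            raise ValueError(
                f"{self.name}: {len(self.frames)} frame pairs exceeds {MAX_FRAME_PAIRS}"
            )
```

Keeping each RGB image paired with its depth image in a single `FramePair` keeps the two frames of a capture together, so they cannot drift out of sync when a record is loaded or filtered.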

Our data collection setup is easy to build and can be replicated by the scientific community or even by non-expert users.